The Role of Complex NLP in Transformers for Text Ranking

Introduction


What is the name of the BOW-BERT paper?

The Role of Complex NLP in Transformers for Text Ranking?


What are the main contributions of the BOW-BERT paper?

- Show that word order plays only a minor role in reranking with a BERT cross-encoder

- Explain why BERT can still outperform bag-of-words (BOW) approaches despite this
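For reference, BM25 is the standard BOW baseline. It scores a document using only term frequencies, document frequencies and document length, so word order never enters the score. The sketch below is minimal and makes several assumptions: whitespace tokenization, the common smoothed IDF variant, and the usual defaults `k1=1.2` and `b=0.75`. None of these values come from the paper, and `bm25_score` is a hypothetical helper.

```python
import math
from collections import Counter


def bm25_score(query: str, doc: str, corpus: list[str],
               k1: float = 1.2, b: float = 0.75) -> float:
    """Okapi BM25 with a smoothed IDF (one common variant).

    Only term counts are used, so reordering the words of the query
    or the document leaves the score unchanged.
    """
    docs = [d.split() for d in corpus]
    n = len(docs)
    avgdl = sum(len(d) for d in docs) / n  # average document length
    tf = Counter(doc.split())              # term frequencies in the scored doc
    dl = sum(tf.values())                  # scored document length
    score = 0.0
    for term in query.split():
        df = sum(1 for d in docs if term in d)  # document frequency
        idf = math.log(1 + (n - df + 0.5) / (df + 0.5))
        f = tf[term]
        score += idf * f * (k1 + 1) / (f + k1 * (1 - b + b * dl / avgdl))
    return score


corpus = [
    "the cat sat on the mat",
    "dogs chase cats in the park",
    "the northern lights glow green",
]
original = bm25_score("cat mat", corpus[0], corpus)
shuffled = bm25_score("mat cat", "mat the on sat cat the", corpus)
# Identical up to floating-point rounding: BM25 only sees counts.
assert math.isclose(original, shuffled)
```

Because BM25 is order-insensitive by construction, the more interesting question is why an order-insensitive BERT still beats it.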

Method


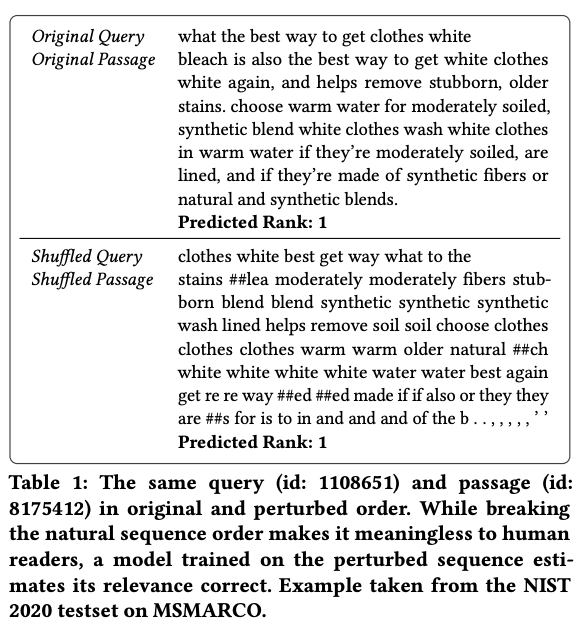

BOW-BERT shows that perturbing the word order of the query and the document does not strongly impact reranking results.
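The sketch below shows this kind of perturbation. It assumes word-level shuffling of whitespace tokens, with the query and the document shuffled independently. The paper's exact protocol may differ, including its tokenization, the number of permutations, and which inputs are shuffled. `shuffle_tokens` and `perturb_pair` are hypothetical helpers. The shuffled pair would then be scored by the same cross-encoder, and the ranking metric compared with the unshuffled run.

```python
import random


def shuffle_tokens(text: str, rng: random.Random) -> str:
    """Return the whitespace tokens of `text` in a random order."""
    tokens = text.split()
    rng.shuffle(tokens)
    return " ".join(tokens)


def perturb_pair(query: str, passage: str, seed: int = 0) -> tuple[str, str]:
    """Shuffle query and passage word order independently (hypothetical helper).

    A fixed seed makes the perturbation reproducible across runs.
    """
    rng = random.Random(seed)
    return shuffle_tokens(query, rng), shuffle_tokens(passage, rng)


query = "what causes the northern lights"
passage = "the northern lights are caused by charged particles from the sun"
q_shuf, p_shuf = perturb_pair(query, passage, seed=42)
# Same bag of words; only the order may differ.
assert sorted(q_shuf.split()) == sorted(query.split())
assert sorted(p_shuf.split()) == sorted(passage.split())
print(q_shuf)
print(p_shuf)
```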

Results


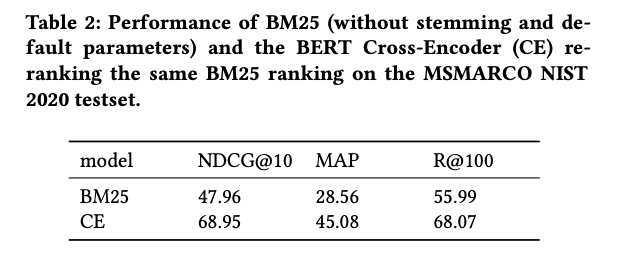

Removing positional embeddings (BOW-BERT) leads to only a small drop in MS MARCO performance, and BOW-BERT still outperforms BM25.

Explanations:

- Query-passage cross-attention: every query token can attend to every passage token

- Richer contextual embeddings that capture word meaning from the aggregated context, regardless of word order
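The toy sketch below shows why removing positional embeddings makes the model order-insensitive while still keeping cross-attention. It rests on several simplifications that do not reflect BERT's actual weights or architecture: random token and segment vectors, two single-head attention layers with identity projections, and [CLS] pooling. Every token attends to every other token, so query and passage tokens still interact. With only token and segment embeddings, however, the [CLS] output depends on the multiset of tokens in each segment, not on their order. `vec`, `self_attention` and `bow_cross_encoder_score` are hypothetical names.

```python
import math
import random

DIM = 8  # toy hidden size (assumption)


def vec(key: str) -> list[float]:
    # Hypothetical embedding table: a fixed pseudo-random vector per key.
    rng = random.Random(key)
    return [rng.uniform(-1.0, 1.0) for _ in range(DIM)]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def self_attention(xs: list[list[float]]) -> list[list[float]]:
    # Single-head attention with identity Q/K/V projections (simplification).
    # Every token attends to every token, so query tokens see passage tokens.
    out = []
    for q in xs:
        logits = [dot(q, k) / math.sqrt(DIM) for k in xs]
        m = max(logits)
        w = [math.exp(logit - m) for logit in logits]
        z = sum(w)
        out.append([sum(wj * v[i] for wj, v in zip(w, xs)) / z for i in range(DIM)])
    return out


def bow_cross_encoder_score(query: str, passage: str) -> float:
    # Token + segment embeddings only: no positional embeddings, as in BOW-BERT.
    segments = [
        (vec("<segment A>"), ["[CLS]"] + query.split() + ["[SEP]"]),
        (vec("<segment B>"), passage.split() + ["[SEP]"]),
    ]
    xs = [
        [t + s for t, s in zip(vec(tok), seg)]
        for seg, toks in segments
        for tok in toks
    ]
    hidden = self_attention(self_attention(xs))  # two toy layers
    # [CLS] stays at index 0; its output is a weighted sum over all tokens.
    return dot(hidden[0], vec("<score head>"))


q = "what causes the northern lights"
p = "charged particles from the sun cause the northern lights"
shuffled_q = " ".join(reversed(q.split()))
shuffled_p = " ".join(sorted(p.split()))
s1 = bow_cross_encoder_score(q, p)
s2 = bow_cross_encoder_score(shuffled_q, shuffled_p)
# Equal up to floating-point rounding from the different summation order.
assert math.isclose(s1, s2, rel_tol=1e-9, abs_tol=1e-12)
```

Moving a word from the query into the passage would still change the input, because the two segments get different segment vectors. Only the order within each segment is lost.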


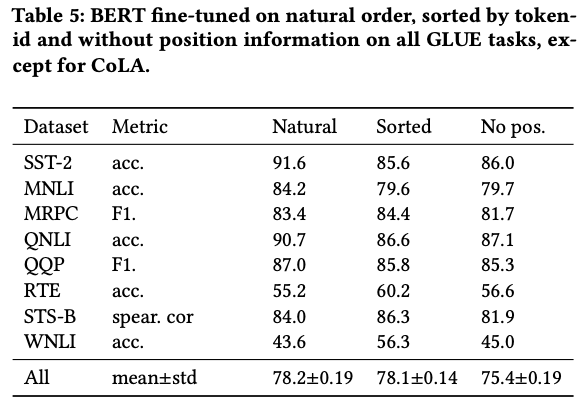

BERT's performance on GLUE tasks with shuffled input shows that its contextual encodings must capture some semantics even without word order.

Conclusions

This is a good, basic research paper on the properties of cross-encoders for information retrieval reranking. It would be interesting to repeat the same experiments with bi-encoder retrieval architectures.