UNDERSTANDING DIMENSIONAL COLLAPSE IN CONTRASTIVE SELF-SUPERVISED LEARNING

Introduction

- What is the name of the DirectCLR paper?

UNDERSTANDING DIMENSIONAL COLLAPSE IN CONTRASTIVE SELF-SUPERVISED LEARNING

Li Jing, Pascal Vincent, Yann LeCun, Yuandong Tian, FAIR

- What are the main contributions of the DirectCLR paper?

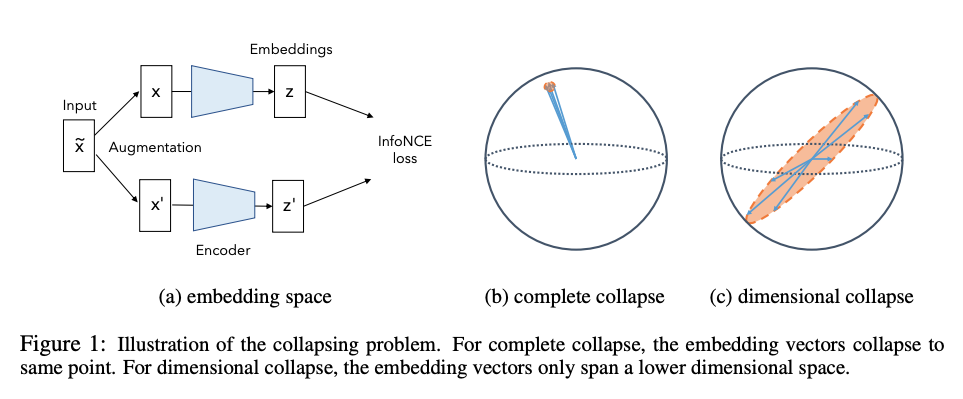

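- Shows that contrastive self-supervised learning on images also suffers from dimensional collapse

- Identifies two mechanisms that cause dimensional collapse:

  - strong augmentation along feature dimensions

  - implicit regularization that favors low-rank solutions

- Proposes DirectCLR, a contrastive learning method that optimizes the representation space directly, without a trainable projector, and outperforms SimCLR with a trainable linear projector on ImageNet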

- Dimensional collapse is where the embedding vectors end up spanning a lower-dimensional subspace instead of the entire available embedding space.

- It is a problem in both contrastive and non-contrastive self-supervised learning methods.
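One way to check for dimensional collapse is to look at the singular value spectrum of the covariance matrix of the embeddings: collapsed dimensions show up as singular values near zero. The sketch below uses synthetic data, and the function names and the relative threshold `rel_tol` are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def embedding_spectrum(z):
    """Singular values (descending) of the covariance of embeddings z, shape (N, d)."""
    z_centered = z - z.mean(axis=0, keepdims=True)
    cov = z_centered.T @ z_centered / z.shape[0]
    return np.linalg.svd(cov, compute_uv=False)

def effective_rank(singular_values, rel_tol=1e-6):
    """Count singular values above rel_tol times the largest (threshold is an assumption)."""
    return int(np.sum(singular_values > rel_tol * singular_values[0]))

rng = np.random.default_rng(0)

# Synthetic embeddings that use all 16 dimensions.
full = rng.normal(size=(1000, 16))

# Synthetic embeddings confined to a 4-dimensional subspace of the 16-dim space.
basis = rng.normal(size=(4, 16))
collapsed = rng.normal(size=(1000, 4)) @ basis

rank_full = effective_rank(embedding_spectrum(full))
rank_collapsed = effective_rank(embedding_spectrum(collapsed))
print(rank_full, rank_collapsed)
```

With real models, plotting the log of the sorted singular values is usually more informative than a single rank number, since collapse is often gradual.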

Method

- DirectCLR theory: dimensional collapse caused by strong augmentation. With a fixed matrix X (defined in Eqn. 6 of the paper) and augmentation strong enough that X has negative eigenvalues, the weight matrix W has vanishing singular values.

- DirectCLR theory: dimensional collapse caused by implicit regularization, which favors low-rank solutions
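The strong-augmentation result above can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions: a single linear layer whose weights follow the gradient flow dW/dt = W X with X held fixed and symmetric, so that W(t) = W(0) exp(Xt). The specific eigenvalues of X, the dimension, and the time horizon are hypothetical values chosen for illustration.

```python
import numpy as np

def evolve_weights(w0, x, t):
    """Closed-form W(t) = W(0) exp(X t) for dW/dt = W X with a fixed symmetric X."""
    eigvals, eigvecs = np.linalg.eigh(x)
    exp_xt = eigvecs @ np.diag(np.exp(eigvals * t)) @ eigvecs.T
    return w0 @ exp_xt

rng = np.random.default_rng(0)
d = 6

# Hypothetical X: the two negative eigenvalues stand in for directions
# where the augmentation is strong.
x_eigvals = np.array([0.5, 0.4, 0.3, 0.2, -1.0, -1.5])
q, _ = np.linalg.qr(rng.normal(size=(d, d)))  # random orthonormal eigenvectors
x = q @ np.diag(x_eigvals) @ q.T

w0 = rng.normal(size=(d, d))  # full-rank initial weights

singular_values = np.linalg.svd(evolve_weights(w0, x, t=20.0), compute_uv=False)
num_vanished = int(np.sum(singular_values < 1e-6))
print(singular_values)
print(num_vanished)
```

In this toy setting, the number of vanishing singular values of W matches the number of negative eigenvalues of X, because the components of W along those eigenvectors decay exponentially.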

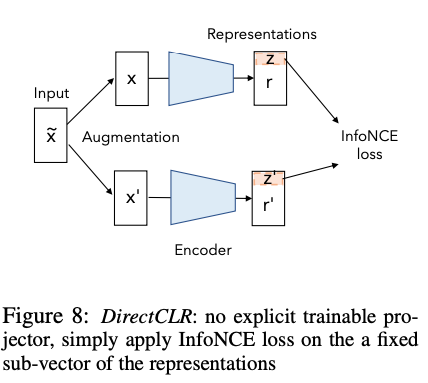

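- DirectCLR design: take a fixed subvector of the representation and feed it directly to the contrastive loss. Two propositions about linear projectors support this design:

  - Proposition 1. A linear projector weight matrix only needs to be diagonal.

  - Proposition 2. A linear projector weight matrix only needs to be low-rank.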
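The design above can be sketched in a few lines. This is a simplified illustration, not the authors' implementation: it assumes the fixed subvector is the first `d0` coordinates, uses a one-directional InfoNCE loss, and picks `temperature=0.1` and `d0=16` arbitrarily. Random arrays stand in for backbone representations of two augmented views.

```python
import numpy as np

def info_nce(z1, z2, temperature=0.1):
    """Simplified one-directional InfoNCE: row i of z1 should match row i of z2."""
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature
    # Numerically stable log-sum-exp over each row.
    row_max = logits.max(axis=1, keepdims=True)
    log_norm = row_max[:, 0] + np.log(np.exp(logits - row_max).sum(axis=1))
    return float(np.mean(log_norm - np.diag(logits)))

def directclr_loss(r1, r2, d0, temperature=0.1):
    """Apply the contrastive loss to the first d0 coordinates only (no projector)."""
    return info_nce(r1[:, :d0], r2[:, :d0], temperature)

rng = np.random.default_rng(0)
r1 = rng.normal(size=(8, 32))              # stand-in representations, view 1
r2 = r1 + 0.01 * rng.normal(size=(8, 32))  # view 2: nearly identical to view 1
r_other = rng.normal(size=(8, 32))         # unrelated representations

loss_matched = directclr_loss(r1, r2, d0=16)
loss_unmatched = directclr_loss(r1, r_other, d0=16)
print(loss_matched, loss_unmatched)
```

The loss only reads the first `d0` coordinates, so changing the remaining coordinates of a representation leaves it unchanged.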

Results

-

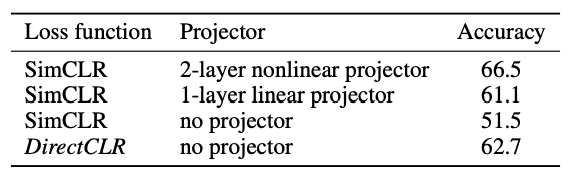

DirectCLR results: outperfroms

SIMCLRwith no learnable projector

Reference

@article{https://doi.org/10.48550/arxiv.2110.09348,

doi = {10.48550/ARXIV.2110.09348},

url = {https://arxiv.org/abs/2110.09348},

author = {Jing, Li and Vincent, Pascal and LeCun, Yann and Tian, Yuandong},

keywords = {Computer Vision and Pattern Recognition (cs.CV), Artificial Intelligence (cs.AI), Machine Learning (cs.LG), FOS: Computer and information sciences},

title = {Understanding Dimensional Collapse in Contrastive Self-supervised Learning},

publisher = {arXiv},

year = {2021},

copyright = {Creative Commons Zero v1.0 Universal}

}